CUPERTINO, Calif. — Apple’s iOS 16 update for iPhones, expected this fall, will bring to users worldwide a controversial “communications security” tool that uses proprietary AI to detect nudity in images sent or received through the Messages app.

The worldwide expansion of this “message analysis” feature, currently available only in the U.S. and New Zealand, will begin in September when iOS 16 is rolled out to the general public.

iPhone models older than the iPhone 8 will not be affected; on Mac computers, the macOS Ventura update will offer the feature.

The nudity detection feature has been touted by Apple as part of its Expanded Protections for Children initiative, but privacy advocates have raised questions about the company’s overall approach to private content surveillance.

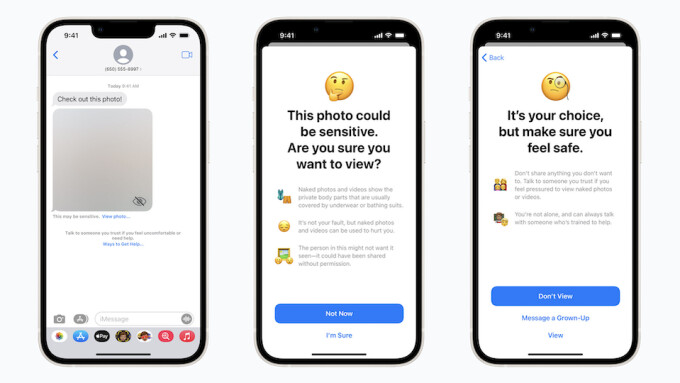

Apple describes the feature as a tool to “warn children when receiving or sending photos that contain nudity.”

The feature, Apple notes, is not enabled by default: “If parents opt in, these warnings will be turned on for the child accounts in their Family Sharing plan.”

When content identified as nudity is received, “the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. Similar protections are available if a child attempts to send photos that contain nudity. In both cases, children are given the option to message someone they trust for help if they choose.”

The AI feature bundled with the default Messages app, the company explained, “analyzes image attachments and determines if a photo contains nudity, while maintaining the end-to-end encryption of the messages. The feature is designed so that no indication of the detection of nudity ever leaves the device.”

According to Apple, the company “does not get access to the messages, and no notifications are sent to the parent or anyone else.”

In the U.S. and New Zealand, the feature has been available since iOS 15.2, iPadOS 15.2 and macOS 12.1.

As French news outlet RTL noted today when reporting on the expansion of the feature, “a similar initiative, consisting of analyzing the images hosted on the photo libraries of users’ iCloud accounts in search of possible child pornography images, had been strongly criticized before being dismissed last year.”

As XBIZ reported, in September 2021 Apple announced that it would “pause” that initiative. The feature would have scanned images on users’ devices in search of child sexual abuse material (CSAM) and sent reports directly to law enforcement.