LOS ANGELES — Part of the bold promise of virtual reality is going beyond mere watching, to actually touching and interacting with elements of the VR world — breaking the fourth wall to transcend static television-style viewing.

This will not only be integral to future gameplay, but will also unlock a flood of adult-oriented offerings that, in contrast to today’s VR porn, will truly immerse viewers in the fantasy of the experience.

For example, imagine a VR scene in which, instead of the model removing her lingerie, the viewer removes it for her. It is a role reversal: today the viewer is the avatar, but tomorrow the model will be the avatar in a live video mix, responding to the viewer’s hand gestures in real time.

The technology that would enable this is complex, to say the least.

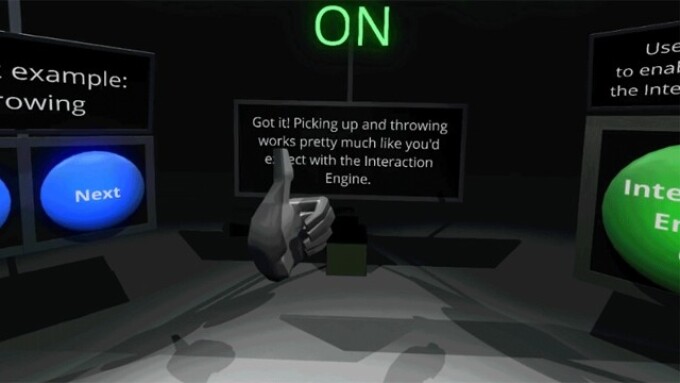

Enter Leap Motion, which today announced the beta release of its Interaction Engine, a module offering hand controls for VR applications built on the popular Unity engine.

According to the company, physics engines were never designed for human hands, so when users bring their hands into the VR space, the results are unpredictable.

“Grabbing an object in your hand or squishing it against the floor, you send it flying as the physics engine desperately tries to keep your fingers out of it,” says a Leap Motion spokesperson. “But by exploring the grey areas between real-world and digital physics, we can build a more human experience. One where you can reach out and grab something — a block, a teapot, a planet — and simply pick it up. Your fingers phase through the material, but the object still feels real. Like it has weight.”

The challenge is enormously complex, but the Leap Motion team has eased the process with the new module, which is now available as part of Unity Core Assets and provides a layer between the Unity game engine and real-world hand physics.

“To make object interactions work in a way that satisfies human expectations, it implements an alternate set of physics rules that take over when your hands are embedded inside a virtual object. The results would be impossible in reality, but they feel more satisfying and easy to use,” the spokesperson explains, noting that the Interaction Engine is designed to handle object behaviors, as well as to detect whether an object is being grasped. “This makes it possible to pick things up and hold them in a way that feels truly solid. It also uses a secondary real-time physics representation of the hands, opening up more subtle interactions.”
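The idea the spokesperson describes, switching to a different rule set once the hand is inside an object, can be illustrated with a toy sketch. This is not Leap Motion's implementation or API: the `Sphere` class, the `is_grasped` heuristic, the `push_velocity` response and every threshold below are hypothetical, chosen only to show the two modes side by side.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Sphere:
    center: Vec3
    radius: float  # metres


def is_grasped(thumb_tip: Vec3, index_tip: Vec3, obj: Sphere, margin: float = 0.005) -> bool:
    """Hypothetical grasp test: both fingertips are at or inside the surface."""
    reach = obj.radius + margin
    return (math.dist(thumb_tip, obj.center) <= reach
            and math.dist(index_tip, obj.center) <= reach)


def push_velocity(tip: Vec3, obj: Sphere, stiffness: float = 50.0) -> Vec3:
    """Conventional response: shove the object away from a penetrating fingertip,
    with a speed proportional to penetration depth (assumed penalty model)."""
    d = math.dist(tip, obj.center)
    depth = obj.radius - d
    if depth <= 0 or d == 0:
        return (0.0, 0.0, 0.0)
    return tuple(stiffness * depth * (c - t) / d for c, t in zip(obj.center, tip))


def step(obj: Sphere, thumb_tip: Vec3, index_tip: Vec3, dt: float = 1 / 90):
    """Advance one frame and report which rule set was applied."""
    if is_grasped(thumb_tip, index_tip, obj):
        # Alternate rules: ignore the penetration and let the object
        # follow the midpoint between the two fingertips.
        midpoint = tuple((a + b) / 2 for a, b in zip(thumb_tip, index_tip))
        return Sphere(midpoint, obj.radius), "grasped"
    # Ordinary rules: each embedded fingertip pushes the object away.
    v_thumb = push_velocity(thumb_tip, obj)
    v_index = push_velocity(index_tip, obj)
    center = tuple(c + (a + b) * dt for c, a, b in zip(obj.center, v_thumb, v_index))
    return Sphere(center, obj.radius), "physics"
```

In this sketch, a pinch around a 2 cm ball returns the `"grasped"` mode and the ball tracks the fingers, while a single finger poking the ball returns `"physics"` and nudges it away. A production engine would need far more than this, including hysteresis on release and support for shapes other than spheres.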

Currently an early beta release, the Interaction Engine works best with cubes and spheres from 1 to 2 inches in size, with ongoing development expected to extend its capabilities.

Developers can download the module, access the documentation and view demo clips here.